In the last weeks I’ve read a few posts about C/SIDE to AL Data Migration and as far as I understand people are struggling to have data migrated from C /SIDE to AL tables.

Data upgrade codeunits to the rescue. They exist in C/AL and exists in AL as well so you should use and abuse them.

If you ever made a data upgrade then you should be familiar with upgrade codeunits but if you don’t, please feel free to ask me for help.

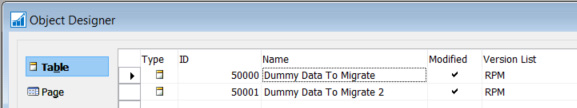

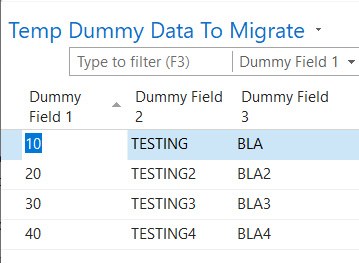

Let’s take a look at the following scenario:

I have two tables in C/AL with dummy data and I want to convert them to extensions. I need to migrate the data from the C /AL table to the new AL table.

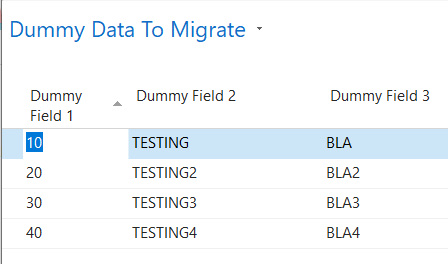

* Table 50000 – Dummy Data To Migrate

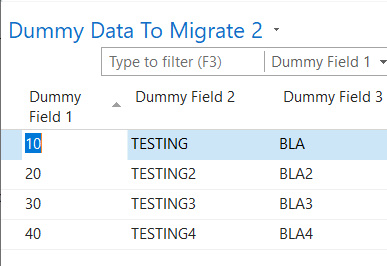

* Table 50001 – Dummy Data To Migrate 2

In table 50000 the field ids are the same. In table 50001 the ids don’t match the final table ids but the fields name do so both scenarios are very similar. I’ll show you how to handle it.

Please consider the following steps:

- Create two C/AL tables and populate them with dummy data.

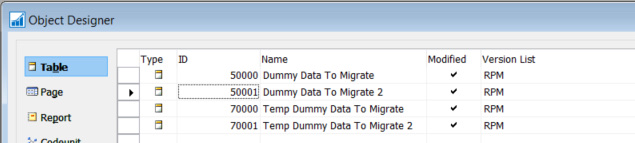

- The new AL tables will have the same table id and/or the same name so we need to create new tables to temporarily hold the data and use data migration codeunits to migrate the data from the original to the upgrade (temp) tables.

New 70000 and 70001 tables are copies of the original ones.

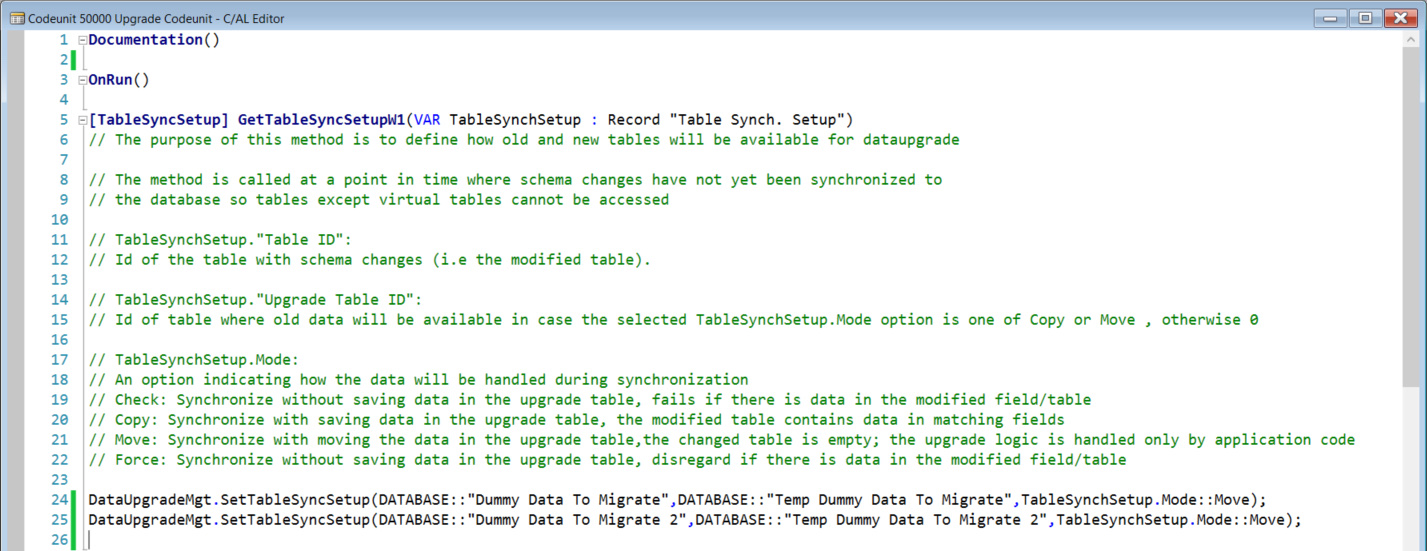

- Create a new upgrade codeunit to move the data from the original tables to the upgrade (temp) tables:

[TableSyncSetup] GetTableSyncSetupW1(VAR TableSynchSetup : Record “Table Synch. Setup”)

// The purpose of this method is to define how old and new tables will be available for dataupgrade// The method is called at a point in time where schema changes have not yet been synchronized to

// the database so tables except virtual tables cannot be accessed// TableSynchSetup.”Table ID”:

// Id of the table with schema changes (i.e the modified table).// TableSynchSetup.”Upgrade Table ID”:

// Id of table where old data will be available in case the selected TableSynchSetup.Mode option is one of Copy or Move , otherwise 0// TableSynchSetup.Mode:

// An option indicating how the data will be handled during synchronization

// Check: Synchronize without saving data in the upgrade table, fails if there is data in the modified field/table

// Copy: Synchronize with saving data in the upgrade table, the modified table contains data in matching fields

// Move: Synchronize with moving the data in the upgrade table,the changed table is empty; the upgrade logic is handled only by application code

// Force: Synchronize without saving data in the upgrade table, disregard if there is data in the modified field/tableDataUpgradeMgt.SetTableSyncSetup(DATABASE::”Dummy Data To Migrate”,DATABASE::”Temp Dummy Data To Migrate”,TableSynchSetup.Mode::Move);

DataUpgradeMgt.SetTableSyncSetup(DATABASE::”Dummy Data To Migrate 2″,DATABASE::”Temp Dummy Data To Migrate 2″,TableSynchSetup.Mode::Move);

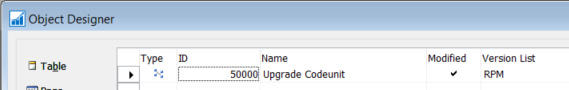

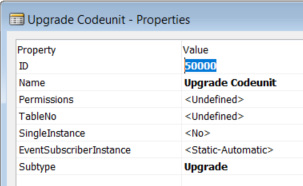

Codeunit subtype must be set to Upgrade.

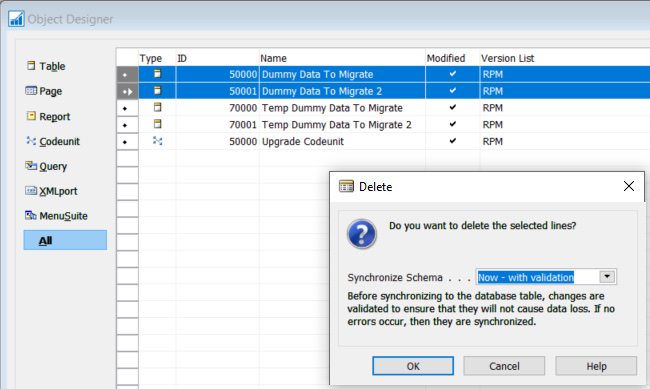

- At this moment, you will be able to delete the original tables without forcing it because a data upgrade codeunit has been created to handle and move the data.

-

Delete tables 50000 and 50001 and choose “Now – with validation” option;

- The tables are deleted without any issue;

- Run the temporary tables and check that the data has been copied from the original to the temporary tables;

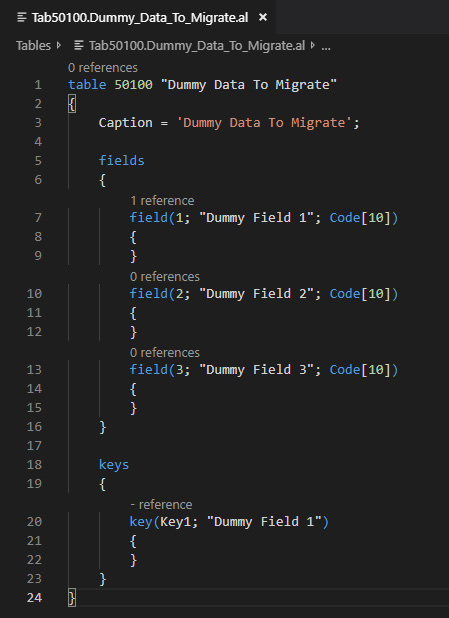

- Now create the AL extension with the two original tables. For the sake of simplicity I’ve created the following tables in AL:

table 50100 “Dummy Data To Migrate”

{

Caption = ‘Dummy Data To Migrate’;

fields

{

field(1; “Dummy Field 1”; Code[10])

{

}

field(2; “Dummy Field 2”; Code[10])

{

}

field(3; “Dummy Field 3”; Code[10])

{

}

}

keys

{

key(Key1; “Dummy Field 1”)

{

}

}

}

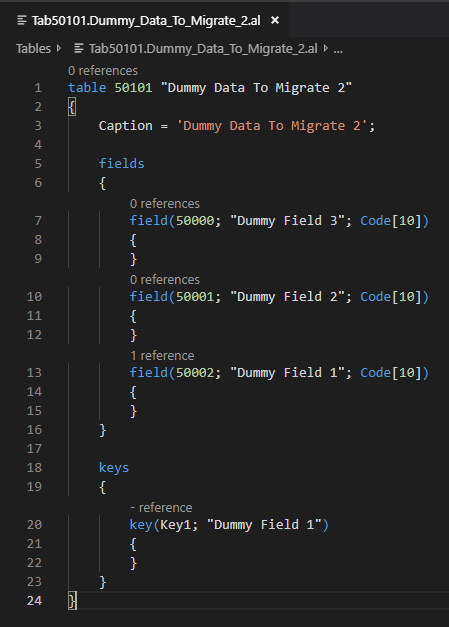

table 50101 “Dummy Data To Migrate 2”

{

Caption = ‘Dummy Data To Migrate 2’;

fields

{

field(50000; “Dummy Field 3”; Code[10])

{

}

field(50001; “Dummy Field 2”; Code[10])

{

}

field(50002; “Dummy Field 1”; Code[10])

{

}

}

keys

{

key(Key1; “Dummy Field 1”)

{

}

}

}

Please note that in “Dummy Data To Migrate 2” table the field ids are not equal and are sorted differently.

- Publish the extension;

- To migrate the data from the temporary tables to the extension tables, create a data upgrade codeunit in AL:

codeunit 50100 “Data Upgrade Codeunit”

{

Subtype = Upgrade;

trigger OnRun()

begin

end;

trigger OnUpgradePerCompany()

begin

DummyTable1_DataCopy;

DummyTable2_DataCopy;

end;

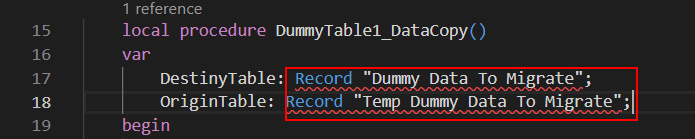

local procedure DummyTable1_DataCopy()

var

DestinyTable: Record “Dummy Data To Migrate”;

OriginTable: Record “Temp Dummy Data To Migrate”;

begin

With OriginTable do begin

if FindSet() then

repeat

DestinyTable.Init();

DestinyTable.Transferfields(originTable);

DestinyTable.Insert;

until next = 0;

//DeleteAll();

end;

end;

local procedure DummyTable2_DataCopy()

var

DestinyTable: Record “Dummy Data To Migrate 2”;

OriginTable: Record “Temp Dummy Data To Migrate 2″;

begin

With OriginTable do begin

if FindSet() then

repeat

DestinyTable.Init();

DestinyTable.”Key Field 1″ := OriginTable.”Key Field 1″; // Add all the key fields.

DestinyTable.”Dummy Field 1″ := OriginTable.”Dummy Field 1″;

DestinyTable.”Dummy Field 2″ := OriginTable.”Dummy Field 2″;

DestinyTable.”Dummy Field 3″ := OriginTable.”Dummy Field 3”;

DestinyTable.Insert;

until next = 0;

//DeleteAll();

end;

end;

}

i. Two issues should arise:

1) VSCode cannot find “Dummy Data To Migrate” nor “Dummy Data To Migrate 2” tables.

To fix this, you must set dependencies with the extension created earlier.

In the app.json, setup the dependencies:

“dependencies”: [

{

“appId”: “6fbcc681-a68a-46bc-ab7c-87c7434fdd06”,

“name”: “DataMigrationArticle”,

“publisher”: “Default publisher”,

“version”: “1.0.0.0”

}

],

2) VSCode cannot find “Temp Dummy Data To Migrate”.

This is related with the symbols generation because this table was created in C/AL and must be accessed in AL. Please refer to the following website:

You need to run the Microsoft Dynamics NAV Development Environment with the generatesymbolreference flag set as true and compile both 70000 and 70001 tables.

finsql.exe generatesymbolreference=yes

Please let me know if you have any doubts.

3) Download the symbols again in VS Code after following the previous steps and you should have no errors in the project.

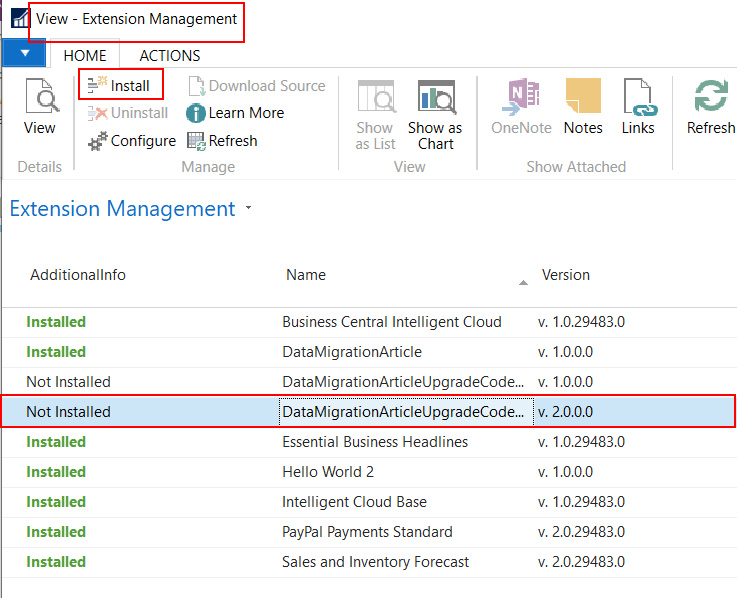

- Publish the upgrade extension and install it.

I’ve packaged the extension in VSCode and used Powershell to publish and install it but you can do it directly from VSCode if you want it.

To do it in Powershell run the commands below.

Don’t forget to update the path, server instance and file names to match yours.

□ Import-Module “C:\Program Files\Microsoft Dynamics 365 Business Central\130\Service\NavAdminTool.ps1” –force

□ Publish-NAVApp -ServerInstance W1_NAVBC_CU5 -Path “C:\…\AL\DataMigrationArticleUpgradeCodeunit\Default publisher_DataMigrationArticleUpgradeCodeunit_1.0.0.0.app” -SkipVerification

□ Sync-NAVApp -ServerInstance W1_NAVBC_CU5 -Name DataMigrationArticleUpgradeCodeunit

□ Install-NAVApp -ServerInstance W1_NAVBC_CU5 -Name DataMigrationArticleUpgradeCodeunit

- Next, uninstall the extension and publish it a higher version extension;

- NOTE: This step is very important. BC will only do the data upgrade when you install a higher version of the upgrade extension. So at this moment update the extension version to a higher version, create a new package and publish the new version.*

□ Uninstall-NAVApp -ServerInstance W1_NAVBC_CU5 -Name DataMigrationArticleUpgradeCodeunit

□ Publish-NAVApp -ServerInstance W1_NAVBC_CU5 -Path “C:\…\AL\DataMigrationArticleUpgradeCodeunit\Default publisher_DataMigrationArticleUpgradeCodeunit_2.0.0.0.app” -SkipVerification

NOTE: Please note that I’ve just published the new extension version using PowershelI, i didn’t install it. I wasn’t able to do the data upgrade using Powershell so please do the following: after publishing the new extension version, open Extensions Management menu in RTC and install the new extension version there.

At this moment the upgrade codeunit is executed and data is migrated;

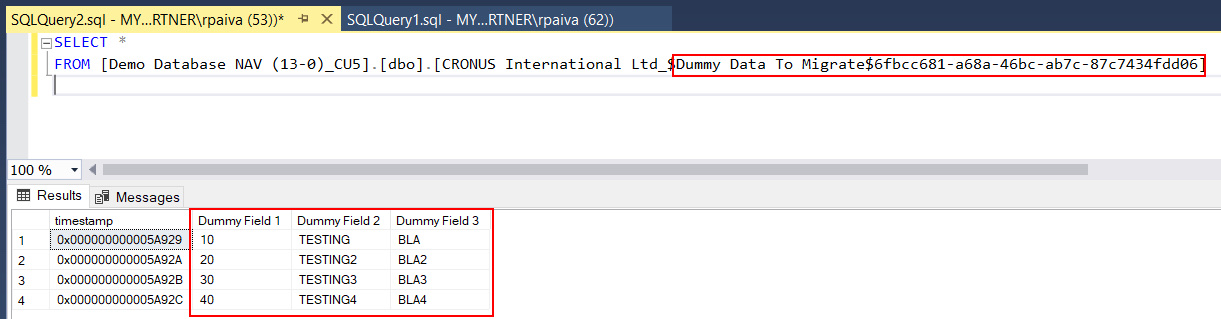

- To check if data was migrated you can use a SQL query:

Et voilá, the data is migrated 😉

- You can now uninstall and unpublish the data upgrade extension and delete tables 70000 and 70001 in C/SIDE.

Feel free to leave your comments or ask me questions.

Hello,

Thanks for your post.

I have a question to upgrade data from some fields in standard table to a table extension ?

What is the process ? the same process with tables with different field range id ?

Hi Steven,

Thanks for your comment.

Yes, the process is basically the same.

If the custom field id or name in the standard table is the same in the table extension then you must create a “temporary” table to store the data from the fields to be migrated.

This “temporary” table must have the standard table key fields and the fields to be migrated.

To copy the data you must create an upgrade codeunit (see step 3). After creating the upgrade codeunit you can replace the customized standard table with the standard object. The custom fields data will be moved to the “temporary” table in the table importing step.

After that you must create an upgrade codeunit in AL to migrate the data from the temporary table to the table extension fields (see steps 10 to 12).

Please let me know if you need further help.

Hello Thanks for your post.

I follow the steps as you described but stuck on this step 2) VSCode cannot find “Temp Dummy Data To Migrate”. I am not able to see CAL custom table in AL project. I follow this link https://docs.microsoft.com/en-us/dynamics365/business-central/dev-itpro/developer/devenv-running-cside-and-al-side-by-side

But When i run this command after changes database and server name:

finsql.exe Command=generatesymbolreference, Database=”Demo Database NAV (11-0)”, ServerName=.\NAVDEMO

It left this file navcommandresult.txt with following error for tables.

[Table Temp Ab Test Table]: Could not load file or assembly ‘Txt2AlConverter, Version=14.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35’ or one of its dependencies. The system cannot find the file specified.

Can you please guide me how to resolve this issue.

Thanks

Hi Abrar,

There seems to be some problem with your BC installation.

Txt2AlConverter is a dll file that is located in your Roletailored Client folder.

C:\Program Files (x86)\Microsoft Dynamics 365 Business Central\140\RoleTailored Client\Txt2AlConverter.dll

Can you confirm that the file exists in BC installation folder?

Did you install BC or did you just copied the folder to your pc?

Hi Ricardo,

Yes that file exist in this folder.

I installed BC and its completed without any error.

Hi again Abrar,

There seems to be some problem with your BC installation.

Maybe some permissions missing during BC installation.

Please try to register the file in global cache assembly manually.

To do that, open Command Prompt as administrator.

Go to folder: C:\Program Files (x86)\Microsoft SDKs\Windows\v10.0A\bin\NETFX 4.6.1 Tools\x64

If you can’t find it is this folder, look for a folder with another NETFX version.

Then run the following command:

gacutil /i “c:\Program Files (x86)\Microsoft Dynamics 365 Business Central\140\RoleTailored Client\Txt2AlConverter.dll”

If it was registered successfully you should get the following message: Assembly successfully added to the cache

Close the Command Prompt and try to generate the symbol references.

Let me hear from you.

Hi Ricardo,

Thanks for response. this command works for me.

Thanks.

Hi Abrar, I’m glad to hear that. Thanks for your reply.

I have question need your help

when we upgrade data from some fields in standard table to a table extension ?

you said we must must create a “temporary” table to store the data from the fields to be migrated.

it it correct that we only create temp table to store custom filed ID ??

when we use code unit (step 3), it will more all data to temp table, or only data for custom field ?

Hi,

Thanks for your question. It’s a good one!

The “temporary” table is only needed if you are extending the standard table and the table extension fields do have the same id and/or name.

There will be a conflict and that’s the reason why you have to copy/move that data to another table, then remove those fields from standard tables, install the extension and move the data from the “temporary” tables to the fields in table extensions.

If the fields in table extension don’t have the same id and/or name, then you don’t need to do all these steps. You just need to install the extension and run the data upgrade codeunit (step 10). And them remove the custom fields from standard tables. In this case you won’t be able to use TRANSFERFIELDS and you must assign the fields one by one, in the data upgrade codeunit, because fields id do not match. In my article I show you both ways.

Regarding the temp table, you are right. If you need to create it, it will only have the standard table key fields and the custom fields that you want to migrate to extensions.

I hope this answer your question.

Kind regards,

Ricardo Paiva Moinhos

My current version is BC 13 since (but all obeject still in 50000 objects with old NAV DEV developement )

1- Backup database

2. Convert all custom object C/Sides to C/AL Code , (i will use fornav.com o convert and it is easy https://www.fornav.com/ )

3. – with Table in 50000 Range . We use your solution with Create Temp table , upgrade Codeunit to migrate Data to table 70000(migrate all data )

– with Standard table with customer field ( I mean standard table but we add more field 500000 )

+ We also need to create temp table but only temp table with – standard table key fields and the custom fields that I want to migrate to extensions.

+(for ex: I have table 18 , Customer with to new customer field 50000(name3), 50001(address3) then I create Temp table with only 3 fields , 1. Customer no (as key), field 5000(name3), field 50001(address3))

+ use upgrade code unit to migrate data to temp table but only 3 fields

4. Manual delete all custom objects (I mean all objects 50000). and go to each standard table and delete custom field (field 5000)

(I have to go to each custom field one by one to delete old custom field and object 50000? right ?)

5. Publish Code AL to Extension to Server and install extension(so all Custom table and customer field will be there)

6. migrate data from old C/SIDE to new Extension AL .use your step 10

I got a bit stuck of step Upgrade code unit

on your instruction , you instruct how to migrate whole data from a table for table

in case I have custom fields on Standard table , can upgrade code unit migrate only necessary data . I mean only data on customer field need to be migrate NOT all standard table data.

tks a lot and have great day

Hi Khoi,

You are right. In my article I only refer how to migrate custom tables. I don’t refer how to move data from custom fields in standard tables. i’ll add that when I have time.

The data migration is not a straight process.

To move data from custom fields in standard tables to new table extensions in AL, considering that the field id or names will be the same, you will need to create a data migration codeunit in C/AL to move data to the “temporary” tables.

What does that changes in my article steps?

Well, in step 5 you won’t delete the standard tables. Instead you must design and delete the custom fields or replace those tables with the standard object.

If you try to compile and do the synchronization choosing the option With Validation, you won’t get an error because you’ve created the data migration codeunit and NAV will use it to move data to the “temporary” tables before doing the table sync and removing the custom fields from SQL.

If you get an error and you want to check in which tables and fields you’re having conflicts, you must do the sync using Powershell command Sync-NavTenant. The development environment do not give you that information.

After the sync runs successfully, you may run a SELECT query in SQL to make sure that the “temporary” tables has the data.

Now, to move the data from the “temporary” tables to AL, just use the data migration codeunit created in AL. It’s the same process but you will only migrate the custom fields.

Hope this helps you.

Please let me know if you need further help.

Good afternoon

This post is very interesting and helpful.

But when I run the command finsql.exe generatesymbolreference=yes, the Dev. environnement opens. and when I try to compile my temp tables, the system crashes with this message : An unhandled Microsoft. framework exception occurs. in finsql.exe …. uncheck just-in time debugging ….. Do you have an Idea why this happen? Thanks a lot

Hi,

Thank you for your message.

Well, it’s hard to understand the reason why it is crashing but…

Which NAV/BC version/cumulative update are you using? Is it the last cumulative update available?

Did you take a look at Event Viewer? Probably there’s some event with the error details.

Hi,

I have a field of type option which is customized to include new option members.

How do I migrate data from this field?

Hi Galina,

Thank you for your email.

You should be able to migrate the data without major issues by using a upgrade codeunit.

On the temporary table you have to create the field of type Option to migrate the data from the source table to the temporary table.

Then you can use codeunit 50100 in my example to copy the data from the temporary table to the final table.

You can use TransferFields if you have that exactly same field on the final table. If the table structure have changed then you might need to change the code to reflect those changes and to move the data from the temporary table to the final table.

Hi Ricardo, Thanks for sharing this very useful information.

I’m trying to upgrade from a customized C/AL BC14 to BC170 using https://docs.microsoft.com/en-us/dynamics365/business-central/dev-itpro/upgrade/upgrade-to-microsoft-base-app-v17.

I have completed the technical migration and I have my customized AL extensions. One of them is a country localization delivered by a 3rd party. Now I’m a bit stuck on which way I should follow. I can take advantage of this to put my data in extensions, still in BC14, right?

And then how should I proceed with the upgrade to 170? I’m very confused, if you could give some headlights on how to proceed with this upgrade I appreciate.

Thanks a lot

Hi Suriraj,

Thanks for your comment.

Please note that despite this article may still be valid and working, I would advise to follow the Microsoft new guidelines and use the new approach they recommend on the link you just sent. The main reason is that you have documentation about it so you should have be able to follow it.

This is a good overview on how it works: https://docs.microsoft.com/en-us/dynamics365/business-central/dev-itpro/upgrade/upgrade-to-microsoft-base-app-v17#overview

Basically you have to create a new so-called migration extension with all the custom fields (in standard and custom – own and 3rd party tables) and use the DestinationAppsForMigration server setting to move all the data from C/AL tables to the migration extension tables.

More info: https://docs.microsoft.com/en-us/dynamics365/business-central/dev-itpro/upgrade/upgrade-destinationappsformigration

Regarding the 3rd party, usually the partner has migration toolkit to help on the migration so you should try to talk to the company that provided the software and ask them for that.

After moving the data to the migration extension, you have to move the data to each of the final extensions.

More info:

https://docs.microsoft.com/en-us/dynamics365/business-central/dev-itpro/developer/devenv-migrate-table-fields-down

https://docs.microsoft.com/en-us/dynamics365/business-central/dev-itpro/developer/devenv-migrate-table-fields

I haven’t done an BC upgrade for quite some time so I’ve never used this new method. After a quick read on the documentation, it seems like it’s kind of a confusing process and you may struggle a little bit but… once you’ve done once, you’re ready for more.

If you want to try to use “my method” and use data migration codeunits to migrate data to C/AL temporary tables still on BC 14 and then move the data to AL extensions, that should still work as well.

I think you’ll need to skip the following steps on the Microsoft upgrade tutorial: Step 7, DestinationAppsForMigrations part in Step 8 and Step 10 to Step 15.

Good luck and let me know which method you decide to use to do the upgrade process.

Hi Ricardo,

This posting is very useful for data upgrade. Thank you for sharing this information.

i tried your steps to data upgrade in bc14. After step 12, i got message like ‘ The xxxxxx extension was successfully installed. All active user must log out and log in again to see the navigation changes.

But when i try to check from SQL(step 13). those tables are not available. How can we check those data? it seems something is wrong.

Hi Latha,

Thank you for your comment.

If the tables are not there then there might be something wrong with the extension installation or you’re not looking at the right place.

Please note that the tables on SQL Server should be created when you publish and install the extension with the tables (step 8 and 9) and the table will be named$

In my case i have:

– Company Name = Cronus International Ltd_

– Table name = Dummy Data to Migrate

– Extension Id = 6fbcc681-…

So that’s why the table is named “Cronus International Ltd_$Dummy Data to Migrate$6fbcc681-…

How did you look at the SQL tables? You run the same script as I did?

Did you update it accordingly?

Can you try to search for the table on the Object Explorer instead?

Maybe you miss the tables because you’re looking at the wrong place.

Please let me hear from you.

Hi Ricardo,

Thank you for your reply.

once again, I repeated your steps from initially.

It is working fine now and all the data were moved to extension tables.

Really thank you so much for sharing this wonderful information.

Could you please share your valuable ideas to upgrade table data from BC14 to higher-version like 160/170/180. that will be more helpful to proceed further upgrade.

This is a brilliant article, thank you !!! I’ve spent a long time wondering how to do this …

Hi Chris,

Thank you for your comment. I’m really glad that this post could help you.

Please note that this post is a bit old now.

If you’re following Microsoft guidelines, on how to migrate from BC 14 to BC 15 or later, using table migration extensions (see here: Upgrading Customized C/AL Application to Microsoft Base Application version 21), then as long as the table and field name is the same, you don’t need to handle data migration and use upgrade codeunit.

This is only necessary if there are breaking changes in tables/fields name/type.

I’ve started a new post around that subject but didn’t had time to finish it. Hope to do it sooner than later.

If you need further assistance, do not hesitate.

Take care!